Power Points:

- Recently, the FTC sent a warning to over 700 brands and retailers regarding penalties for fake endorsements and reviews.

- Content moderation is an essential tool for ensuring that user-generated content — including reviews, photos, videos, and Q&A — is authentic and fraud-free.

- Follow these best practices to prevent fake reviews.

If you partner with influencers, you’ve likely heard about the notice the Federal Trade Commission (FTC) recently sent to over 700 brands and retailers regarding penalties for fake endorsements and reviews. While this notice primarily references influencer content and testimonials, you may be wondering how it applies to your customer reviews.

Today, nearly all shoppers depend on user-generated content (UGC), including reviews, Q&A, photos, and videos, to make informed purchase decisions.

For this reason, every brand and retailer wants to have a positive ratings and reviews footprint. It can therefore be tempting to selectively block negative reviews or manufacture positive review content.

Don’t even think about it. It’s a very bad idea. And again for anyone at the back who didn’t hear: DON’T DO IT. Why? Number one: it’s completely unethical and goes against the whole ethos of why shoppers read reviews in the first place when making purchase decisions. Number two: sooner or later, shoppers will figure it out. And when they do, they won’t be happy.

Ask yourself: Do you really want to jeopardize consumer trust and your brand equity? Artificially manufacturing your review footprint is simply not a long-term strategy for success.

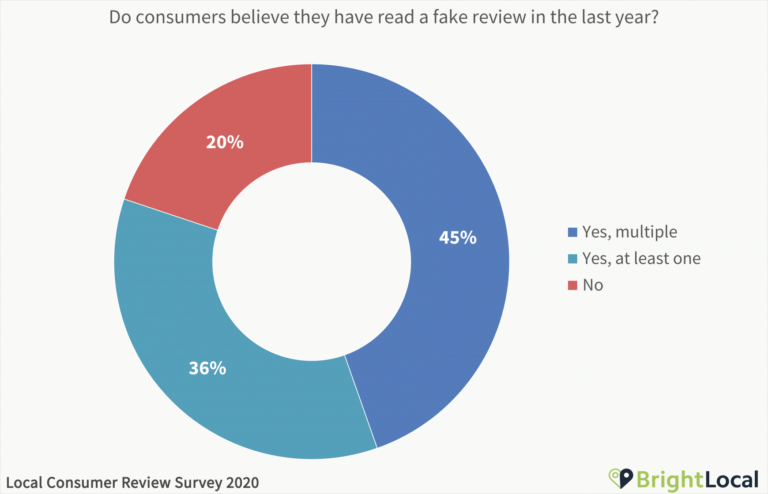

Unfortunately, fake reviews have become more prevalent in recent years. According to research from BrightLocal, four in five shoppers say they’ve encountered a fake review in the last year. One in three have seen multiple.

With the rise in fake reviews — and widespread knowledge of them — it’s essential that brands earn consumer trust by displaying authentic reviews, written by actual customers who have actually purchased and used your products.

That makes it critical to have a solid moderation process in place to ensure this content is, in fact, authentic. The risk is high for brands that don’t, from lost customers to upwards of $43,000 in fines from the FTC.

Does your existing Ratings & Reviews vendor take authenticity seriously?

As we said, authenticity is important. Whichever vendor you work with when it comes to UGC or Ratings and Reviews, you need to be asking questions about how they account for this. (Spoiler alert: At PowerReviews, we take authenticity seriously. Very seriously.)

For example, at PowerReviews, we have an entire Authenticity Policy dedicated to it. There, we state our commitment to collecting, displaying and sharing user-generated content from our clients that is authentic and fraud-free. That means we only publish content that hasn’t been modified, made up, fraudulently collected, or selectively curated.

By screening reviews for authenticity first and foremost, we ensure that any UGC collected and displayed through our platform follows the latest FTC guidelines on endorsements and reviews.

Here are some questions to ask your vendor:

- What is your company’s underlying philosophy on authentic reviews and why?

- What is your step-by-step process to ensure only authentic content makes it to our site?

- What are you doing about the new FTC Guidelines?

- Explain how your fraud detection processes work. Why should I have confidence in them?

- Is all your content moderation automated? How effective is it?

- How is your content moderated? Is there an option to supplement with human moderators or other advanced mechanisms that identify and prevent fake and inauthentic reviews? If not, why not?

So, how do you ensure you promote UGC that’s authentic? And how do you prevent the publishing of fake reviews? It all comes down to content moderation.

A four-step, best practice content moderation process

What does best-in-class ratings and reviews moderation look like?

First of all, it needs to account for each and every piece of content submitted by your shoppers. Every user-generated review, photo, video, question, or answer should go through an extensive moderation process to confirm it’s authentic, appropriate, and fraud-free. No piece of content should be altered in any way.

All content that is published should appear as it was originally written, including typos and grammatical errors. This is ultimately critical to authenticity and to snuffing out fake reviews.
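
One way to check that published content really is unaltered is to store a digest of the text at submission time and compare it before display. The following is a minimal sketch of that idea, not a description of how any particular vendor does it; the function names `fingerprint` and `is_unaltered` are hypothetical.

```python
import hashlib


def fingerprint(text):
    """Return a SHA-256 digest of the review text exactly as submitted."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def is_unaltered(submitted_digest, published_text):
    """True when the published text is character-for-character what was submitted."""
    return fingerprint(published_text) == submitted_digest


# The shopper's typos are part of the original and must survive publication.
original = "Grate product, works grate!"
digest = fingerprint(original)

print(is_unaltered(digest, original))
print(is_unaltered(digest, "Great product, works great!"))
```

In this sketch, even a well-meaning spelling fix changes the digest, so an edited review would fail the check.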

Step 1: Fraud Detection

Displaying fake reviews directly violates FTC guidelines. So it’s important to keep this content off your website.

To safeguard against this threat, industry-leading fraud technology can analyze the device fingerprint data used to submit a piece of UGC. This data identifies the device associated with a review, such as a smartphone or tablet, and helps surface suspicious device or IP information. With this technology, you can catch many different types of fraudulent reviews, including spam, duplicate content, and promotional content.

For example, if the technology detects a large number of reviews coming from a single device, those reviews get flagged for potential fraud. Of course, there is a possibility it’s just a customer who’s particularly zealous about reviewing every single product they bought for Black Friday. Most of the time, however, this kind of activity indicates fraud. Either way, the tech applies a “fraud” tag to those reviews, flagging them for review, potentially by human moderators (more on them in step 3).
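
To make the single-device example concrete, here is a minimal sketch of a volume check. It assumes each review already carries a device identifier from a fingerprinting tool; the `Review` class, the `device_id` field, and the threshold of five are illustrative assumptions, not details of any real fraud product.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Review:
    review_id: str
    device_id: str  # hypothetical fingerprint value supplied by a fraud tool
    tags: set = field(default_factory=set)


def tag_high_volume_devices(reviews, max_per_device=5):
    """Add a "fraud" tag to every review from a device that exceeds the limit."""
    counts = Counter(r.device_id for r in reviews)
    for r in reviews:
        if counts[r.device_id] > max_per_device:
            r.tags.add("fraud")
    # Return the tagged reviews so they can be queued for a closer look.
    return [r for r in reviews if "fraud" in r.tags]


# Six reviews from one device, one review from another.
reviews = [Review(f"r{i}", "device-A") for i in range(6)]
reviews.append(Review("r6", "device-B"))

flagged = tag_high_volume_devices(reviews)
print(len(flagged))  # 6
```

The tag only marks content for a second look. As noted above, the zealous Black Friday shopper would be tagged too, which is why the final call is better left to a person.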

Step 2: Automated Filtering

Beyond fraud, systems can also scan each piece of content through an automated filtering process to catch additional authenticity issues, from profanity to personally identifiable information. When a potential issue is detected, a tag is added to that content so that it can be later reviewed by a human.

Examples of the types of content tagged:

- Content about the wrong product or retailer

- Contradictory content, e.g. a rave review with a 1-star rating

- Duplicate content

- Foreign language

- Medical advice

- Personally identifiable information

- Profanity

- Violent content

- Website URLs

For some tags, such as profanity, the content can be automatically rejected for publication. For others, it can be passed to a human moderation team.
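
The tagging and routing described above could be sketched roughly as follows. This is an illustration under simplifying assumptions: the word lists, regular expressions, tag names, and the `tag_content` and `route` functions are all hypothetical, and a real filter would be far more thorough.

```python
import re

# Illustrative word lists and patterns only; real filters are much larger.
PROFANITY = {"darn", "heck"}
POSITIVE_WORDS = {"love", "amazing", "perfect", "excellent"}
URL_RE = re.compile(r"(https?://|www\.)\S+", re.IGNORECASE)
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

# Tags that reject content outright instead of sending it to a moderator.
AUTO_REJECT_TAGS = {"profanity"}


def tag_content(text, rating, extra_profanity=()):
    """Return the set of tags that apply to one review."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    tags = set()
    # extra_profanity lets a brand add its own blocked words or phrases.
    if words & (PROFANITY | set(extra_profanity)):
        tags.add("profanity")
    if URL_RE.search(text):
        tags.add("website_url")
    if EMAIL_RE.search(text):
        tags.add("pii")
    # A rave review paired with a 1-star rating is contradictory.
    if rating == 1 and words & POSITIVE_WORDS:
        tags.add("contradictory")
    return tags


def route(tags):
    """Decide the next step for a piece of content based on its tags."""
    if tags & AUTO_REJECT_TAGS:
        return "auto_reject"
    if tags:
        return "human_review"
    return "untagged"  # assumption: untagged content moves on to the next step


print(route(tag_content("What the heck", 3)))               # auto_reject
print(route(tag_content("This is amazing, I love it", 1)))  # human_review
```

Keeping the auto-reject tags in one small set makes it easy to decide, tag by tag, which issues are clear-cut enough for a machine and which deserve a human read.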

A best-in-class vendor will enable you to add additional words or phrases to the profanity filter. It will also make regular updates to these filters, based on emerging slang terms or political slogans, to ensure nothing profane gets published.

(PowerReviews applies observation tags, which we detail in our Moderation Policy.)

Step 3: Human Moderation

For many businesses and Ratings and Reviews vendors, the moderation process stops at step two. But relying solely on technology can leave you vulnerable to some of the exact issues that violate the FTC guidelines, like deceptive performance claims, medical advice, and other forms of misrepresentation.

Human moderation adds an additional layer of protection for this very reason. Technology can help prevent fraud and profanity, but humans are better equipped to review for nuance, innuendo, and context.

To meet the high standards for authenticity required to successfully snuff out fake reviews (not to mention the FTC guidelines), a moderation process powered by both human and artificial intelligence is ideal.

For example, at PowerReviews, our human moderators review every piece of UGC in our secure portal. Any content our automated filtering process has flagged with an observation, such as profane language or a review that doesn’t discuss the product, is highlighted for the moderator.

After reviewing all of the observations, the moderator determines whether the review should be published. A rejected review is not published and therefore not syndicated. (If a brand or retailer using our solution chooses to override this decision, the review publishes only to their own site, not to any syndicated retailers.)
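
The publish-and-syndicate rule above can be written down as a small decision function. This is only a sketch of the rule as described here: the function name and destination labels are hypothetical, and it assumes that an approved review is eligible for both the client site and syndication.

```python
def publication_targets(moderator_approved, client_override=False):
    """Return the places a review may appear after the moderator's decision."""
    if moderator_approved:
        # Assumption: approved reviews can be published and syndicated.
        return {"client_site", "syndicated_retailers"}
    if client_override:
        # An overridden rejection publishes to the client's own site only.
        return {"client_site"}
    return set()


print(publication_targets(False, client_override=True))  # {'client_site'}
```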

Also of note: our moderators work on several teams. Some moderators work on all English content, while others review content that needs to follow a specific set of guidelines, such as herbal supplements or financial products. (PowerReviews can also arrange an exclusive moderator to review a client’s content for additional concerns, such as medical advice.)

Step 4: Badging and Displaying Content

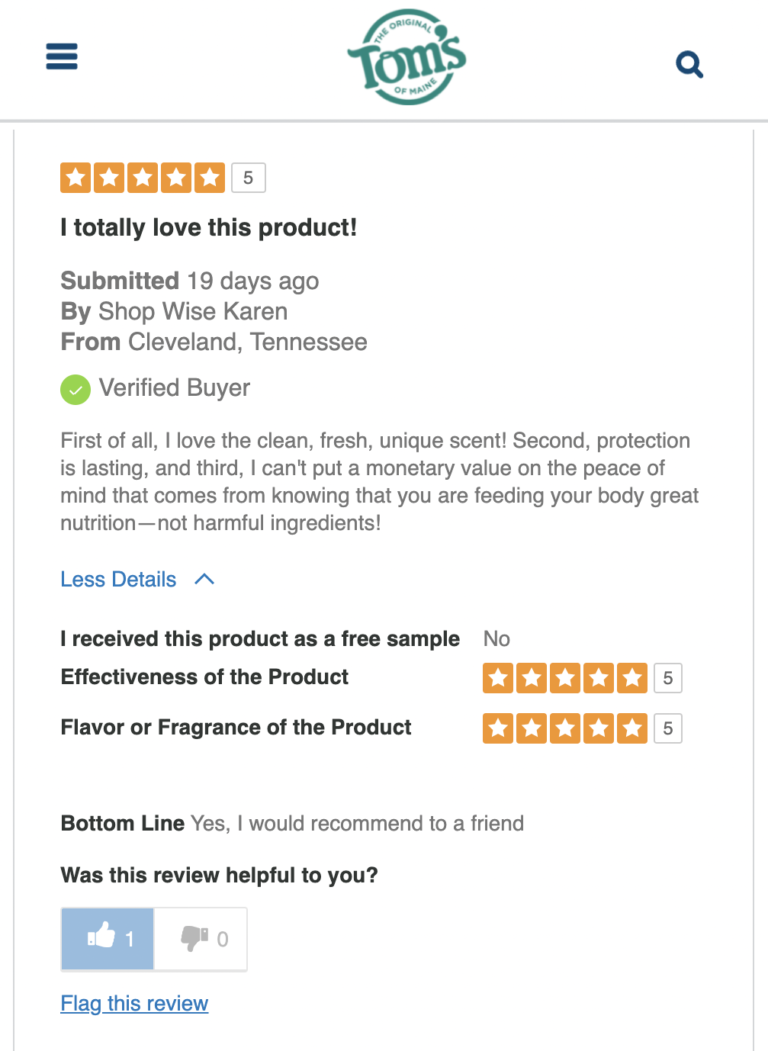

Once a piece of content passes steps 1-3, it’s ready to be displayed on your website. Woohoo! But remember: it’s important to use accurate badging and disclosure codes that indicate the source of the content.

This added layer of transparency shows details such as:

- Where a review came from

- Whether or not it has been verified, i.e., written by a person who actually purchased the product

- If it’s been syndicated from another site

- If it was collected via a sampling campaign or was shared as a contest entry

- If the review was submitted by a company employee

PowerReviews offers the following options, which serve as a best-practice example.

Verified buyers

A “Verified Buyer” badge should be assigned to reviews written by someone who has actually purchased the product they are reviewing, as identified through their purchase history. This builds consumer confidence, and it also ensures you don’t run afoul of Section 5 of the FTC Act, which prohibits “misrepresenting an endorser as an actual, current, or recent user” of a product.

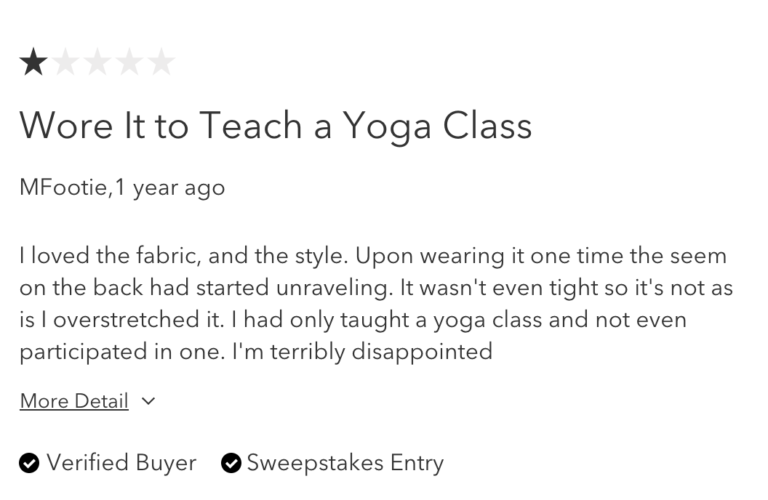

Sweepstakes & product sampling

The FTC also frowns upon failing to disclose whether an endorsement is paid. In the world of influencers, the proper way to do this is by clearly stating that a post or endorsement is sponsored, e.g. with a #sponsored or #ad hashtag at the top of a post.

A review might be considered a paid endorsement if the reviewer received the product for free, such as through a sweepstakes or product sampling campaign. Here are some examples of special badges that identify this type of content:

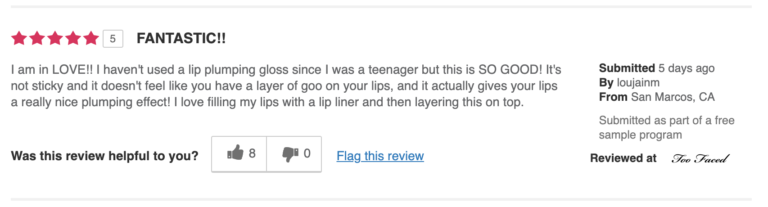

Syndicated reviews

The second example above also shows how you should badge syndicated reviews. All review content that is syndicated beyond the client’s original website must be badged appropriately. (At PowerReviews, we do this by including the name or logo of the site where the review was originally written: Too Faced in the example above.)

Staff reviews

Finally, reviews that are written by someone who works for a brand or retailer must also be disclosed, as these people may naturally have a more biased opinion of a brand. For these reviews, add a “Staff Reviewer” badge.

It’s important to share as much context as possible about your UGC, as this fosters trust with online shoppers. And in many cases, it’s also required by the FTC (Learn more about PowerReviews Badges).
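
As a rough illustration of the badging rules in this step, the sketch below maps what is known about a review's source to the badges it should display. The `ReviewSource` fields and the `badges_for` function are hypothetical. “Verified Buyer” and “Staff Reviewer” are the badge names used above; the other labels are placeholders, not actual PowerReviews badge text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ReviewSource:
    verified_purchase: bool = False
    incentive: Optional[str] = None        # "sweepstakes" or "sampling"
    syndicated_from: Optional[str] = None  # site where the review was written
    written_by_staff: bool = False


def badges_for(source):
    """Return the disclosure badges a review should carry, in display order."""
    badges = []
    if source.verified_purchase:
        badges.append("Verified Buyer")
    if source.incentive == "sweepstakes":
        badges.append("Sweepstakes Entry")      # placeholder label
    elif source.incentive == "sampling":
        badges.append("Received Free Product")  # placeholder label
    if source.syndicated_from:
        badges.append(f"Originally posted on {source.syndicated_from}")
    if source.written_by_staff:
        badges.append("Staff Reviewer")
    return badges


review = ReviewSource(verified_purchase=True, syndicated_from="Too Faced")
print(badges_for(review))
# ['Verified Buyer', 'Originally posted on Too Faced']
```

Because each badge is derived from a fact about the review rather than set by hand, a review can carry several disclosures at once.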

Preserve the Authenticity of Your UGC Through Moderation

Consumers turn to user-generated content because it’s an authentic, unbiased source of information about products and brands from other people like themselves. Consumers trust UGC.

But simply collecting and displaying UGC isn’t enough. Brands must make it a priority to preserve the authenticity of this content. If you’re relying on a UGC vendor, like most brands and retailers, you need to ensure your UGC vendor has effective and powerful capabilities that prevent fake and inauthentic reviews from sneaking through.

Content moderation is an essential tool in this process. By ensuring that every piece of UGC that gets published to your site is authentic, brands and retailers can win the trust of both customers and the FTC.

The multi-step content moderation process at PowerReviews helps retailers publish content that is authentic and meets the FTC’s standards for consumer endorsements. Learn more.